While I was working on my grFailOver POC, I have also done some additional parallel testing. One of them was to see how online DDL are executed inside a Group Replication cluster.

The online DDL feature provides support for instant and in-place table alterations and concurrent DML. Checking the Group Replication (GR) official documentation I was trying to identify if any limitation exists, but the only thing I have found was:

"Concurrent DDL versus DML Operations. Concurrent data definition statements and data manipulation statements executing against the same object but on different servers is not supported when using multi-primary mode. During execution of Data Definition Language (DDL) statements on an object, executing concurrent Data Manipulation Language (DML) on the same object but on a different server instance has the risk of conflicting DDL executing on different instances not being detected."

This impacts only when you have a multi primary scenario, which is NOT recommended and not my case.

So in theory GR should be able to handle the online DDL without problems.

My scenario :

I have two DCs and I am going to do actions on my DC1 and see how it propagates all over, and what impact I will have.

The test

To do the test I will run and insert from select.

insert into windmills_test select null,uuid,millid,kwatts_s,date,location,active,time,strrecordtype from windmills7 limit 1;

And a select, on my Primary node gr1, while on another connection execute the ALTER:

ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE;

As you may have noticed I am EXPLICITLY asking for INPLACE and lock NONE. So in case MySQL cannot satisfy it should exit and not execute the command.

In the meantime on all other nodes I will run a check command to see WHEN my Alter is taking place.

Let us roll the ball:

On my Primary the command to insert the data

[root@gr1 grtest]# while [ 1 = 1 ];do da=$(date +'%s.%3N');/opt/mysql_templates/mysql-8P/bin/mysql --defaults-file=./my.cnf -uroot -D windmills_s -e "insert into windmills_test select null,uuid,millid,kwatts_s,date,location,active,time,strrecordtype from windmills7 limit 1;" -e "select count(*) from windmills_s.windmills_test;" > /dev/null;db=$(date +'%s.%3N'); echo "$(echo "($db - $da)"|bc)";sleep 1;done

Again on Primary another session to execute the alter:

DC1-1(root@localhost) [windmills_s]>ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE;

On other nodes to monitor when Alter will start:

while [ 1 = 1 ];do echo "$(date +'%T.%3N')";/opt/mysql_templates/mysql-8P/bin/mysql --defaults-file=./my.cnf -uroot -D windmills_s -e "show processlist;"|grep -i "alter";sleep 1;done

What happens:

Data is inserted by the loop.

Alter start, but I can still insert data in my table, and most important the data is propagated to all nodes of the DC1 cluster.

No alter action on the other nodes.

.559 .502 .446 .529 .543 .553 .533 .602 .458 <---- end of the alter locally

Once ALTER is complete on the local node (Primary) it is then executed (broadcast) to all the nodes participating in the cluster.

[ERROR 1205 (HY000) at line 1: Lock wait timeout exceeded; try restarting transaction <--- waiting for waiting for handler commit No INSERTS are allowed

But write are suspended waiting for:

37411 | root | localhost | windmills_s | Query | 19 | Waiting for table metadata lock | insert into windmills_test select null,uuid,millid,kwatts_s,date,location,active,time,strrecordtype

And eventually it will timeout.

The other point is that any write hangs until the slowest node had apply the ALTER:

It is important to note that all nodes, not only the PRIMARY remain pending waiting for the slow node:

The slowest drive all.

GR3:

11:01:28.649 48 system user windmills_s Query 171 altering table ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE 11:01:29.674 48 system user windmills_s Query 172 waiting for handler commit ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE

GR2

Start 11:00:14.438 18 system user windmills_s Query 97 altering table ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE Ends 11:02:00.107 18 system user windmills_s Query 203 altering table ALTER TABLE windmills_test ADD INDEX idx_1 (`uuid`,`active`), ALGORITHM=INPLACE, LOCK=NONE

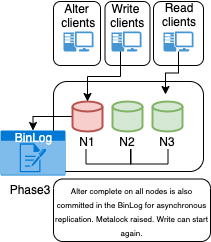

Finally when the last node in the GR cluster has applied the ALTER, the writes will resume, and the Replica node on DC2 will start its ALTER operation on PRIMARY first, then on the other nodes.

Summarizing

We can say we have 3 phases.

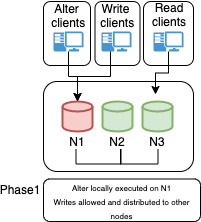

The first one is when the primary start to write and we have ONLINE operation:

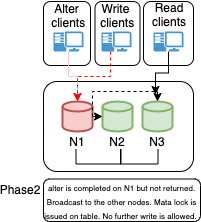

Phase 2: is when the Primary ends the local operation and transmit the changes to the Secondary nodes:

Here all writes are locked by metalock.

Phase 3: is when all nodes have finalized the operation, the Alter is passed over replication channel (async replica) and metalock is removed.

- Writes are executed on Primary

- ALTER is executed on the Primary

- DDL does not impact the write operation and respects the not blocking directive.

- ALTER is completed on Primary and passed to all nodes

- Meta lock is raised on nodes

- ALL cluster waits for slowest node to complete

- When all is done in the DC1 then the action is replicated to DC2

- Goto point 2

![]() BUT!!! If the primary goes down, the cluster is unable to elect a new primary until a Secondary had completed the operations. Meaning if alter takes 10 hours, we have a possible cluster without Primary for 10 hours. See also bug: https://bugs.mysql.com/bug.php?id=103421

BUT!!! If the primary goes down, the cluster is unable to elect a new primary until a Secondary had completed the operations. Meaning if alter takes 10 hours, we have a possible cluster without Primary for 10 hours. See also bug: https://bugs.mysql.com/bug.php?id=103421

Conclusion

It seems that at the moment we have a partial coverage of the online ddl feature when using group_replication. Of course to have to wait for the SECONDARY nodes is better and less impacting than to wait for PRIMARY first and then the SECONDARIES.

But is confusing given I was expecting to have either full online coverage (I had explicitly asked for that in the DDL command), or a message telling me it cannot be executed online.

Of course I would prefer to have FULL online coverage ;0)

Keep in mind my setup was also pretty standard and that changing group_replication_consistency does not affect the outcome. But not sure I can classify this as a bug, more an unexpected undesirable behavior.

Finally I cannot avoid to say that it seems to me a bit too much expect to have the users accepting to wait for two times the time of an alter, first on the primary then on the secondary nodes. I know we have PT-OSC to save the day, but I really think that native support should be better and allow the alter to start when it starts on the Primary, not after doubling the required time.